Evaluate your experiment

For each training type (except image detection usecases), you will find the same navigation menu, which lets you explore your usecase and its models.


General : general information about your models and a comparison of their performance

Models : list of the created models and information about the trained models

Features : information about the dataset used for training and the feature engineering configuration

Predictions : create bulk predictions from CSV files and view all bulk predictions made for this usecase

Tasks : DAG and list of all operations performed during training

Versions : list of all versions of the selected usecase

Report : generate PDF reports explaining the models and usecases

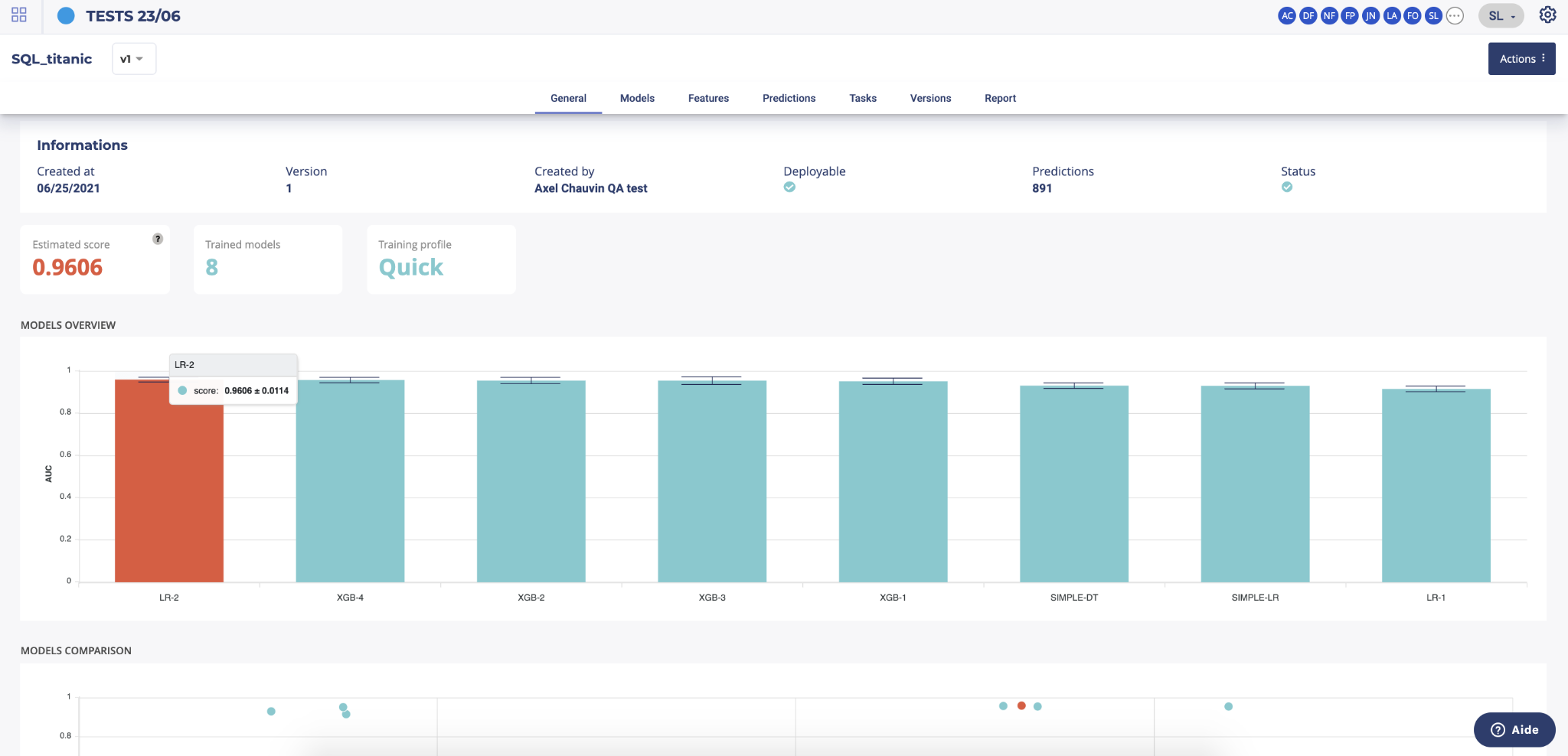

General

In this menu, you will find general information about your usecase such as the list of created models.

The information header shows the key information about your usecase. You can expand this panel by clicking “more information”. By default, when you open a usecase, the information displayed relates to its latest version. You can switch between versions using the dropdown list on the left side of this panel.

Action buttons : on the right side of the information panel, action buttons allow you to:

edit the name and description of the usecase version

create a new version

duplicate the usecase

delete the usecase

Below the information panel, cards display key information about your usecase. Note that the holdout score card is displayed only if a holdout dataset was selected during training configuration.

Two graphs are displayed on the General page of a usecase:

The models ranked by score. Clicking a bar of this chart opens the details of the corresponding model.

Model scores vs. estimated prediction time

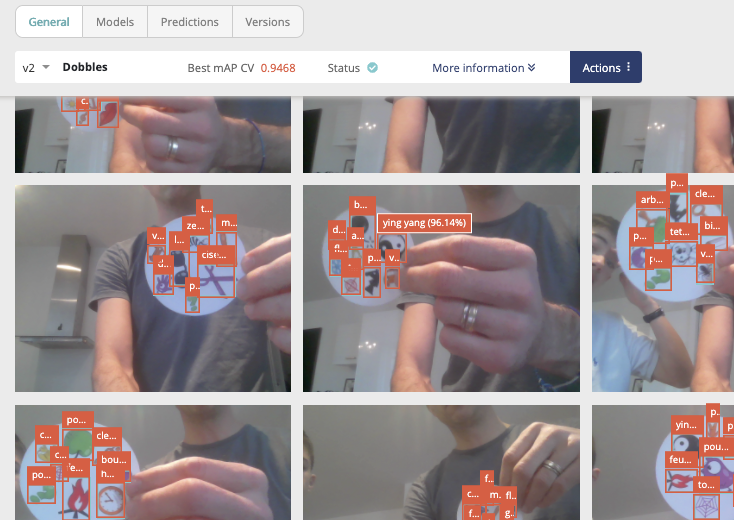

Please note that for image detection (object detection) usecases, the General screen differs from that of other usecase types. It shows a sample of the images used during training, with the bounding boxes predicted using cross-validation in orange and the true bounding boxes in blue.

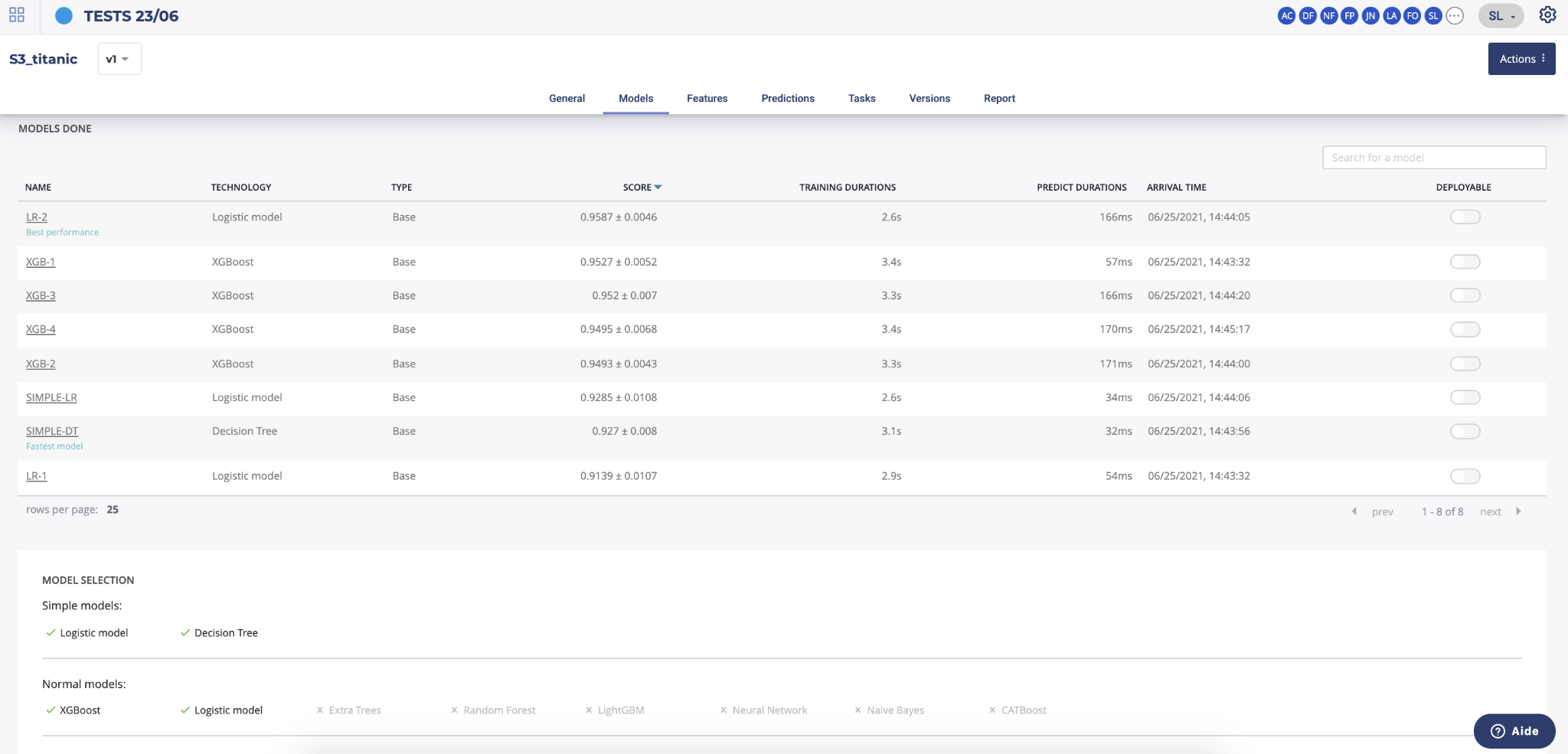

Models

By clicking on the Models entry of the top usecase navigation, you access the list of models trained for this usecase version. At the bottom of the page, you will also find information about the models selected for this training.

Clicking a model name in the list redirects you to the model detail page. A toggle button is also available on the right side of the list for each model; it lets you tag the model as deployable. To learn how to deploy a model, please refer to the dedicated section.

Features

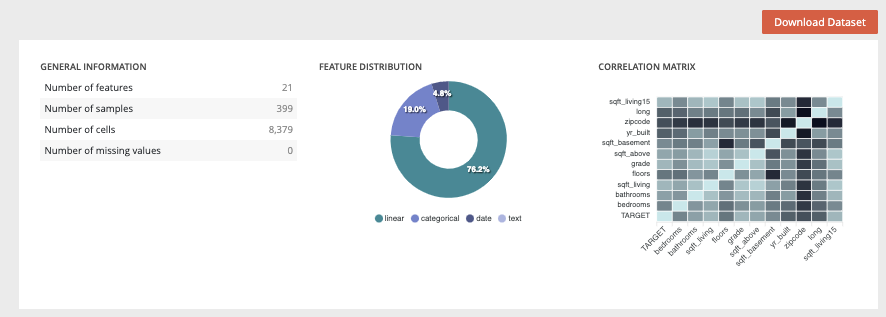

In this section, you will find all the information related to the dataset used for training.

The top panel shows general information about the dataset used for training, such as its number of columns, samples, and cells, as well as the usecases that use this dataset.

You can also download the training dataset by clicking the “download dataset” button at the top of the page.

Two graphs are also displayed:

the distribution of features by type (linear, categorical, date, or text). This distribution is calculated automatically when the dataset is uploaded to the platform

a correlation matrix showing the correlation coefficients between variables. Each cell of the matrix shows the correlation between two variables
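To make the correlation matrix concrete, here is a minimal Python sketch that computes a correlation coefficient for every pair of numeric columns. It assumes Pearson correlation; the platform may use a different coefficient, and the column names and values are made up for illustration.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length numeric lists.

    Undefined (division by zero) if either column is constant.
    """
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def correlation_matrix(columns):
    """Map every (column, column) pair to its correlation coefficient."""
    names = list(columns)
    return {(a, b): pearson(columns[a], columns[b]) for a in names for b in names}


# Hypothetical numeric columns of a dataset.
data = {
    "age": [25, 32, 47, 51, 62],
    "income": [30, 42, 55, 61, 70],
    "visits": [9, 7, 6, 4, 2],
}
matrix = correlation_matrix(data)
for (a, b), r in matrix.items():
    print(f"{a:>6} / {b:<6} {r:+.3f}")
```

The diagonal is always 1 (a variable is perfectly correlated with itself) and the matrix is symmetric, which is why such charts are often read from one triangle only.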

Below this top panel, three tabs are available:

Features analysis : table of feature information computed after the dataset upload, such as the percentage of missing values

Drop features : list of all the features you dropped from training during usecase configuration

Configuration : list of all the feature engineering applied to the dataset during training
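The percentage of missing values shown in the Features analysis tab can be sketched as follows. This assumes that an empty CSV cell counts as missing; the platform's exact definition of a missing value is not documented here, and the sample data is hypothetical.

```python
import csv
import io


def missing_value_percentages(csv_text):
    """Percentage of empty cells in each column of a CSV document."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    total = len(rows)
    result = {}
    for column in reader.fieldnames or []:
        # Assumption: an empty (or whitespace-only) cell is a missing value.
        missing = sum(1 for row in rows if not (row.get(column) or "").strip())
        result[column] = 100.0 * missing / total if total else 0.0
    return result


sample = """age,city,income
25,Paris,30000
,Lyon,
40,,
52,Nice,61000
"""
print(missing_value_percentages(sample))
```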

Predictions

The predictions menu lets you make bulk predictions on a previously uploaded dataset and view the holdout predictions made during training.

To make a new prediction, first select a model from the dedicated dropdown list, then a dataset uploaded to the project. When you click the “launch prediction” button, the platform computes the predictions and generates a file, which you can download using the button on the right side of the corresponding entry in the prediction list below.

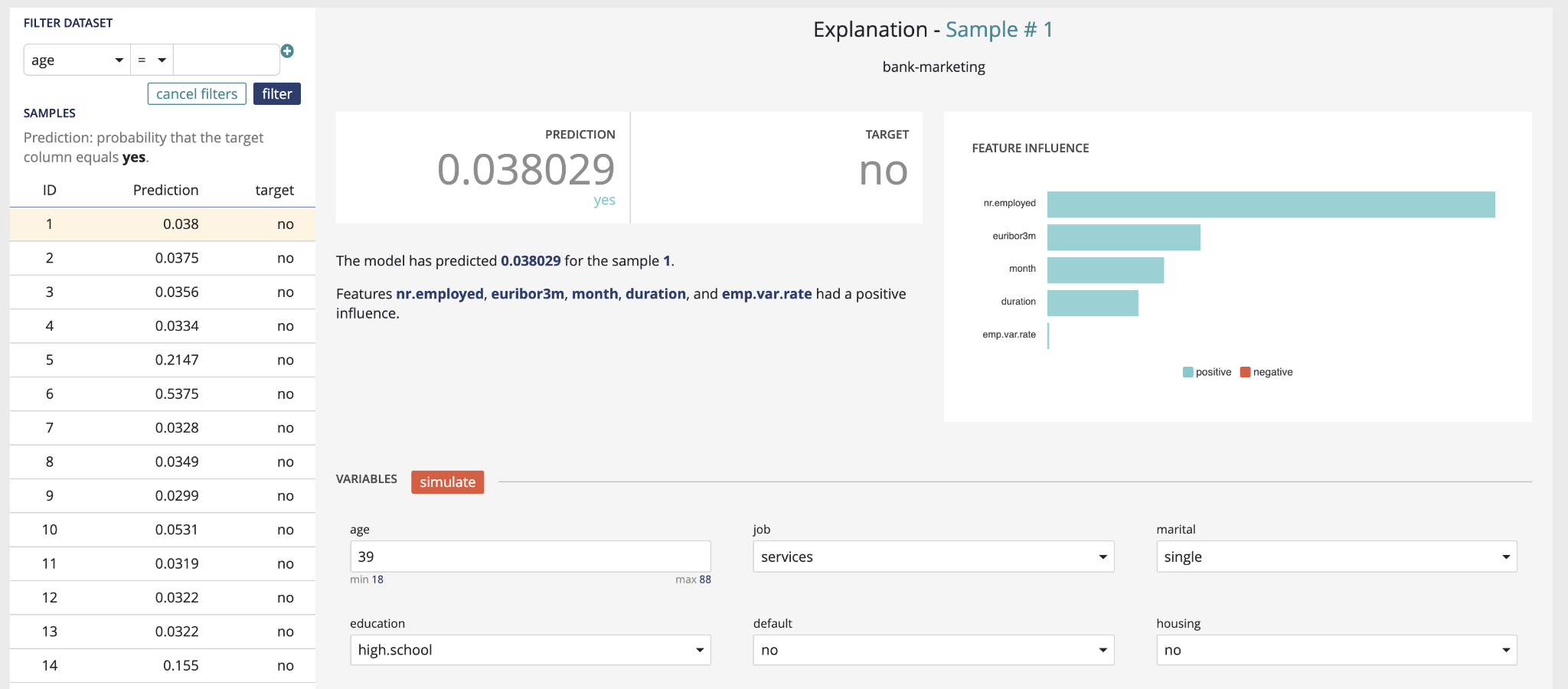

Prediction explanations

You can analyze these predictions in more depth by generating an explanation for each prediction in the dataset. To open the explanation screen, click the menu of the table entry for the prediction you want to explain. Depending on the complexity of the model and the size of your dataset, the screen may take a few seconds to load.

The explanation screen consists of three parts:

The “filter dataset” panel, on the left, lets you select a specific prediction of your dataset to explain, and apply filters to the dataset to select the predictions you are interested in. A filter is defined by:

a variable present in your dataset (selected from the dropdown)

an operator

a value

All rows matching the clause are returned. You can apply up to two filters and choose whether a row must match both of them (“and”) or either of them (“or”).

The “explanation” panel, at the top right, displays the prediction for the selected row, the actual value of the target (if it is present in the dataset), and the explanation itself: the relative impact, positive or negative, of each variable on the final prediction.

The “variables” panel, at the bottom right, lets you run “what-if” analyses to see how the prediction and its explanation change when you adjust variable values. Clicking the “simulate” button updates the prediction and explanation above.
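The filter logic of the “filter dataset” panel can be sketched as below: each filter is a (variable, operator, value) clause, and up to two clauses are combined with “and” or “or”. The operator symbols, the `filter_rows` helper, and the sample rows are hypothetical; the dropdown in the interface may offer different operators.

```python
import operator

# Hypothetical operator symbols; the interface's dropdown may differ.
OPERATORS = {
    "=": operator.eq,
    "!=": operator.ne,
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
    "<=": operator.le,
}


def matches(row, clause):
    """True if the row satisfies a (variable, operator, value) clause."""
    variable, op, value = clause
    return OPERATORS[op](row[variable], value)


def filter_rows(rows, first, second=None, combine="and"):
    """Apply one filter, or two filters combined with "and" / "or"."""
    if second is None:
        return [row for row in rows if matches(row, first)]
    if combine == "and":
        return [row for row in rows if matches(row, first) and matches(row, second)]
    if combine == "or":
        return [row for row in rows if matches(row, first) or matches(row, second)]
    raise ValueError('combine must be "and" or "or"')


predictions = [
    {"id": 1, "age": 25, "city": "Paris"},
    {"id": 2, "age": 47, "city": "Lyon"},
    {"id": 3, "age": 62, "city": "Paris"},
]
older = ("age", ">", 30)
in_paris = ("city", "=", "Paris")
print([r["id"] for r in filter_rows(predictions, older, in_paris, "and")])
print([r["id"] for r in filter_rows(predictions, older, in_paris, "or")])
```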

Tasks

This menu gives an overview of all tasks performed by the platform during usecase training, along with their status. This screen helps you understand the operations performed during training and, if errors occurred, at which step they happened.

For that, two views are available:

List view : lists every individual operation performed

DAG view : graphical view of the individual operations and their dependencies

You can switch between these views by clicking the “execution graph” and “tasks board” tabs.
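To illustrate how a DAG of tasks helps locate an error, here is a minimal sketch using Python's standard `graphlib` module (Python 3.9+). The task names, statuses, and dependencies are hypothetical and do not reflect the platform's actual pipeline. The sketch orders tasks so that each one comes after its dependencies, then reports the failed tasks whose dependencies all succeeded, which is where an error originated.

```python
from graphlib import TopologicalSorter

# Hypothetical training tasks, each mapped to the tasks it depends on.
dependencies = {
    "load_dataset": [],
    "feature_engineering": ["load_dataset"],
    "train_model_a": ["feature_engineering"],
    "train_model_b": ["feature_engineering"],
    "blend_models": ["train_model_a", "train_model_b"],
}
# Hypothetical statuses after a training run.
status = {
    "load_dataset": "done",
    "feature_engineering": "done",
    "train_model_a": "failed",
    "train_model_b": "done",
    "blend_models": "skipped",
}


def execution_order(deps):
    """Order tasks so that every task comes after all of its dependencies."""
    return list(TopologicalSorter(deps).static_order())


def root_failures(deps, status):
    """Failed tasks whose dependencies all succeeded: where errors originate."""
    return [
        task
        for task in execution_order(deps)
        if status[task] == "failed" and all(status[d] == "done" for d in deps[task])
    ]


print(execution_order(dependencies))
print(root_failures(dependencies, status))
```

Here `blend_models` is skipped only because an upstream task failed, so the DAG points you to `train_model_a` rather than to the last task in the list.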

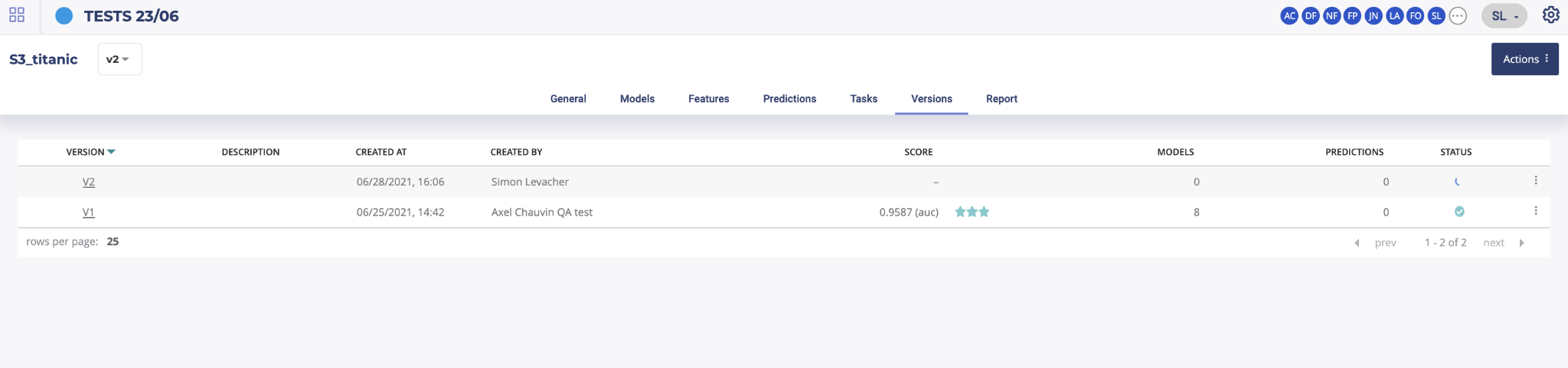

Versions

In the Prevision.io platform, you can iterate on your usecases by creating new versions. There are three ways to do this:

From the usecase list of a project: click the action button on the right side of the usecase you want to iterate on, then select “new version”

From any page of a usecase: click the “actions” button at the top right, then select “new version”

From the “Versions” menu of a usecase: click the action button on the right side of a listed version, then select “new version”

The Versions menu of a usecase lists all trained versions of this usecase. Clicking a version number, on the left side of this list, opens the page of that usecase version. You can also navigate between versions using the dropdown list at the top left of the information banner on any page of a usecase.

After clicking a “new version” button, you are redirected to the usecase configuration menu. The configuration of the version you are iterating on is loaded automatically, so you can see how it was configured and select the changes you want to apply.

TIP : when creating a new usecase or a new version, add a description on the first screen of the usecase configuration. It will help you find the version you want to work with later.

Report

In this menu, you can generate PDF reports about the models of the usecase. To do so, choose from the dropdown list the models you want to include in the report, and set the feature importance count. You can also include or exclude explanations by checking or unchecking the “show explanation” checkbox. Clicking the generate button then displays a preview of the report, and clicking the “print” button at the top of this preview downloads the PDF report.

Model pages

Each model page is specific to the data type and training type chosen for the usecase. The screens and features of each training type are explained in the following sections. You can access a model page in two ways:

by clicking on a model in the graph of the usecase General page

by clicking on a model in the list of the Models menu of the top navigation bar

You then land on the selected model page, which is split into different parts depending on the training type.

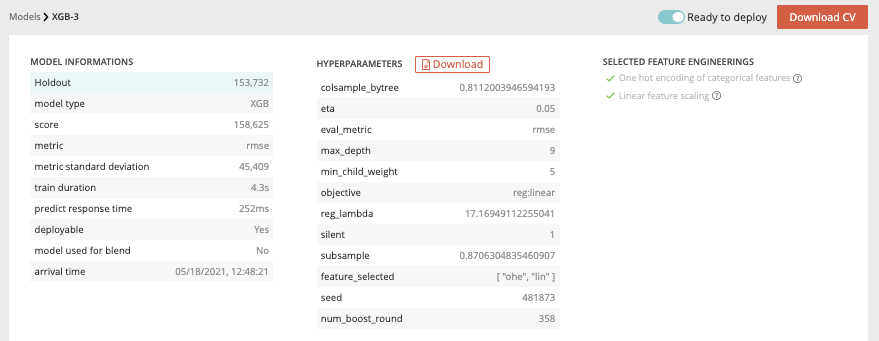

Tabular usecases - general information

For every tabular training type, the model's general information is displayed at the top of the screen, in three sections:

Model information : information about the trained model, such as the selected metric and the model score

Hyperparameters : downloadable list of the hyperparameters applied to this model during training

Selected feature engineering (for regression, classification & multi-classification) : feature engineering applied during training

Preprocessing (for text similarity usecases) : list of preprocessing steps applied to textual features

Please note that for the following usecase types, the general information section differs from the others:

Image detection usecases : no feature engineering

Text similarity usecases : preprocessing steps are displayed instead of feature engineering

Model page - graphical analysis

To help you better understand the selected model, several graphical analyses are displayed on a model page. The graphs displayed depend on the usecase type, as summarized below:

Tabular regression |

Tabular classification |

Tabular multi-classification |

Tabular text similarity |

Time series regression |

Image regression |

Image classification |

Image multi-classification |

Image detection |

|

|---|---|---|---|---|---|---|---|---|---|

Scatter plot graph |

Yes |

No |

No |

No |

Yes |

Yes |

No |

No |

No |

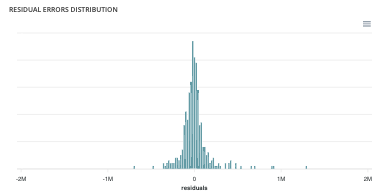

Residual errors distribution |

Yes |

No |

No |

No |

Yes |

Yes |

No |

No |

No |

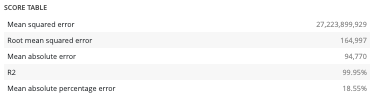

Score table (textual) |

Yes |

No |

No |

No |

Yes |

Yes |

No |

No |

No |

Residual errors distribution |

No |

No |

No |

No |

No |

No |

No |

No |

No |

Score table (overall) |

No |

No |

Yes |

No |

No |

No |

No |

Yes |

No |

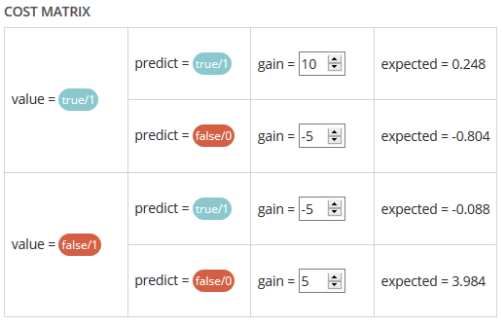

Cost matrix |

No |

Yes |

No |

No |

No |

No |

Yes |

No |

No |

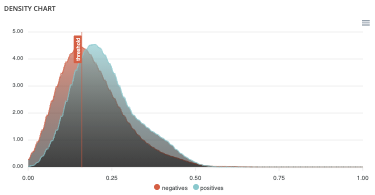

Density chart |

No |

Yes |

No |

No |

No |

No |

Yes |

No |

No |

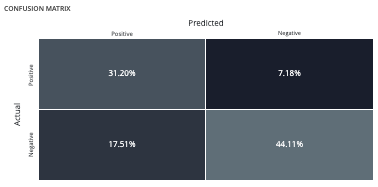

Confusion matrix |

No |

Yes |

Yes |

No |

No |

No |

Yes |

Yes |

No |

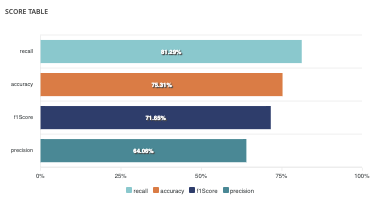

Score table (by class) |

No |

Yes |

Yes |

No |

No |

No |

Yes |

Yes |

No |

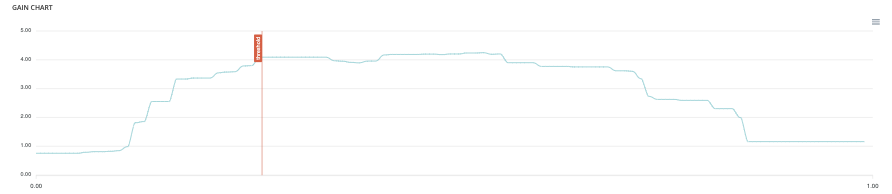

Gain chart |

No |

Yes |

No |

No |

No |

No |

Yes |

No |

No |

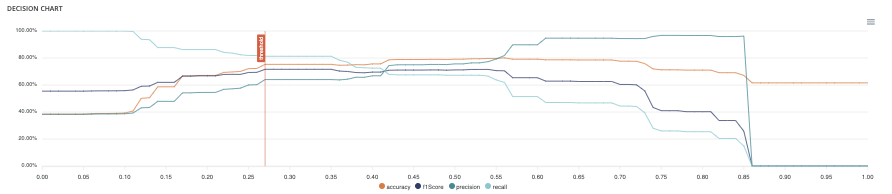

Decision chart |

No |

Yes |

No |

No |

No |

No |

Yes |

No |

No |

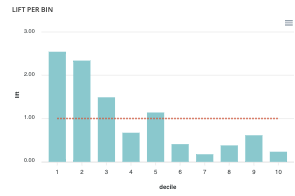

lift per bin |

No |

Yes |

No |

No |

No |

No |

Yes |

No |

No |

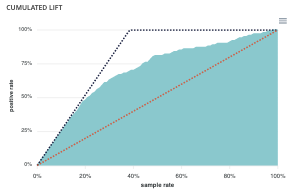

Cumulated lift |

No |

Yes |

No |

No |

No |

No |

Yes |

No |

No |

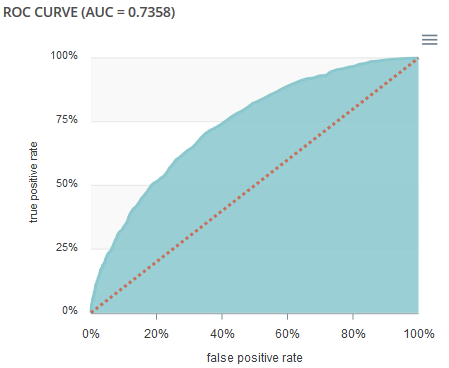

ROC curve |

No |

Yes |

Yes |

No |

No |

No |

Yes |

Yes |

No |

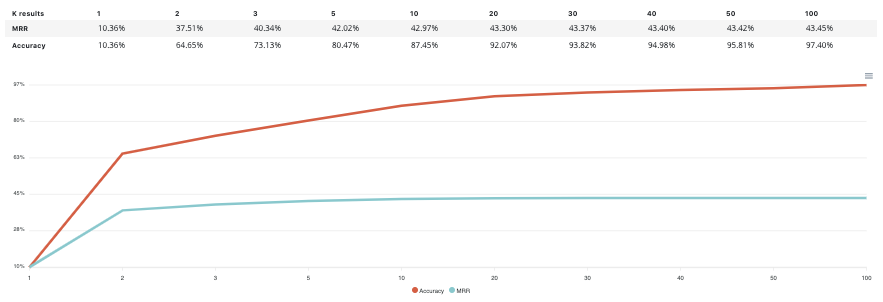

Accuracy VS K results |

No |

No |

No |

Yes |

No |

No |

No |

No |

No |

Model page - graph explanations

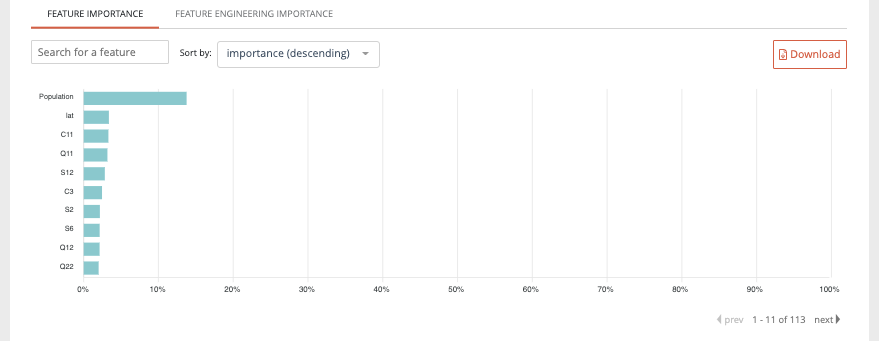

Next, the feature graphs (not available for text similarity) show the influence of the features on the selected model. Two graphs are accessible through the two feature tabs:

Feature importance : graph showing the importance of the dataset features. Clicking on the chart redirects you to the dedicated feature page.

Feature engineering importance : graph showing the importance of the selected feature engineering.

Please note that the feature importance graph also takes feature engineering importance into account. For example, if a feature has little influence on the model by itself but gains a strong influence after feature engineering, this influence is reflected in the feature importance graph.
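One way to picture how engineered features feed back into the feature importance graph is to attribute the importance of each model input to the raw dataset features it was derived from. The sketch below assumes importances are summed and split evenly across source features; the feature names, values, and mapping are hypothetical, and the platform's actual aggregation may differ.

```python
from collections import defaultdict

# Hypothetical importances of the model inputs, some produced by feature engineering.
input_importance = {
    "age": 0.05,
    "signup_date__day_of_week": 0.30,
    "signup_date__month": 0.10,
    "income": 0.25,
    "age_x_income": 0.30,
}
# Hypothetical mapping from each model input to the raw feature(s) it comes from.
sources = {
    "age": ["age"],
    "signup_date__day_of_week": ["signup_date"],
    "signup_date__month": ["signup_date"],
    "income": ["income"],
    "age_x_income": ["age", "income"],
}


def raw_feature_importance(importance, sources):
    """Sum each input's importance into its raw features, split evenly among them."""
    totals = defaultdict(float)
    for name, value in importance.items():
        parents = sources[name]
        for parent in parents:
            totals[parent] += value / len(parents)
    return dict(totals)


print(raw_feature_importance(input_importance, sources))
```

In this made-up example, `signup_date` is never used directly by the model, yet it ends up among the most important raw features because of the inputs engineered from it.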

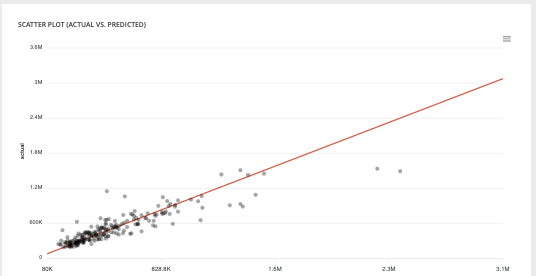

Scatter plot graph : This graph plots the actual values against the values predicted by the model. The more tightly the point cloud gathers around the orange line, the better the model.

Residual errors distribution : This graph shows the dispersion of the errors, i.e. the residuals. A good model displays residuals centered on 0 and symmetric around it.

Score table (textual) : The displayed metrics include:

The mean squared error (MSE)

The root mean squared error (RMSE)

The mean absolute error (MAE)

The coefficient of determination (R2)

The mean absolute percentage error (MAPE)
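The metrics listed above follow their standard definitions; the sketch below computes them, together with the residuals plotted in the residual errors distribution, from lists of actual and predicted values. It assumes MAPE is expressed as a percentage and that no actual value is 0 (MAPE is undefined in that case); the sample values are made up.

```python
import math


def regression_scores(actual, predicted):
    """Standard regression metrics; residual = actual - predicted."""
    n = len(actual)
    residuals = [a - p for a, p in zip(actual, predicted)]
    mse = sum(r * r for r in residuals) / n
    mean_actual = sum(actual) / n
    ss_res = sum(r * r for r in residuals)
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return {
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "MAE": sum(abs(r) for r in residuals) / n,
        "R2": 1 - ss_res / ss_tot,
        # Expressed in percent; undefined if any actual value is 0.
        "MAPE": 100 * sum(abs(r / a) for r, a in zip(residuals, actual)) / n,
        "residuals": residuals,
    }


actual = [100.0, 150.0, 200.0, 250.0]
predicted = [110.0, 140.0, 210.0, 240.0]
scores = regression_scores(actual, predicted)
print({name: value for name, value in scores.items() if name != "residuals"})
```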

You can download any graph displayed in the interface by clicking the button at its top right and selecting the format you want.

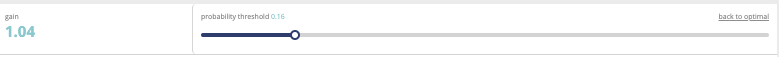

Slider : For binary classification, some graphs and scores depend on a probability threshold: predictions above it are considered positive, and those below it negative. This is the case for:

The scores

The confusion matrix

The cost matrix

You can therefore set the threshold according to your preferences. By default, the threshold is the one that maximizes the F1-Score. If you move the threshold, you can click the “back to optimal” link to move the cursor back to the probability that maximizes the F1-Score.
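The sketch below shows how a probability threshold turns scores into positive and negative predictions, and how the F1-optimal threshold can be found by trying each distinct predicted probability. It assumes a probability equal to the threshold counts as positive; the labels and probabilities are made up.

```python
def confusion_counts(labels, probs, threshold):
    """Count (tp, fp, tn, fn); a probability >= threshold is predicted positive."""
    tp = fp = tn = fn = 0
    for label, prob in zip(labels, probs):
        predicted_positive = prob >= threshold
        if predicted_positive and label == 1:
            tp += 1
        elif predicted_positive:
            fp += 1
        elif label == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def f1_at(labels, probs, threshold):
    tp, fp, _, fn = confusion_counts(labels, probs, threshold)
    # F1 = 2 * precision * recall / (precision + recall) = 2TP / (2TP + FP + FN)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


def f1_optimal_threshold(labels, probs):
    """Try every distinct predicted probability and keep the one with the best F1."""
    return max(sorted(set(probs)), key=lambda t: f1_at(labels, probs, t))


labels = [0, 0, 1, 0, 1, 1, 0, 1]
probs = [0.10, 0.30, 0.35, 0.40, 0.60, 0.70, 0.80, 0.90]
best = f1_optimal_threshold(labels, probs)
print(best, f1_at(labels, probs, best))
```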

Cost matrix : Provided that you can quantify the gains or losses associated with true positives, false positives, false negatives, and true negatives, the cost matrix estimates the average gain per prediction made by your classifier. In the example shown, each prediction yields an average of €2.83.

The matrix is initialized with default values that can be freely modified.
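As a minimal sketch of the cost matrix estimate, the average gain per prediction is the gain of each cell weighted by how many predictions fall into it, divided by the total number of predictions. The gains and counts below are hypothetical and unrelated to the €2.83 example.

```python
def average_gain(tp, fp, tn, fn, gains):
    """Expected gain per prediction: each cell's gain weighted by its count."""
    total = tp + fp + tn + fn
    weighted = (
        tp * gains["tp"] + fp * gains["fp"] + tn * gains["tn"] + fn * gains["fn"]
    )
    return weighted / total


# Hypothetical gains (e.g. in €) for each outcome, and hypothetical counts.
gains = {"tp": 10.0, "fp": -2.0, "tn": 0.0, "fn": -5.0}
print(average_gain(tp=30, fp=10, tn=50, fn=10, gains=gains))
```

Because the counts depend on the probability threshold, moving the slider changes this estimate even when the gains stay the same.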

Density chart : The density graph shows how actual positives and negatives are distributed across the predicted probabilities. The better your classifier, the more the two density curves are separated, one concentrated around 0 and the other around 1.

Confusion matrix : The confusion matrix shows the distribution of true positives, false positives, true negatives, and false negatives for the selected probability threshold. Cells are darker for large quantities and lighter for small ones.

Ideally, most individuals should be located on the diagonal of the matrix, i.e. correctly classified.

Score table (graphical) : The displayed metrics include:

Accuracy: the sum of true positives and true negatives divided by the number of individuals

F1-Score: the harmonic mean of precision and recall

Precision: true positives divided by the number of predicted positives (true positives plus false positives)

Recall: true positives divided by the sum of true positives and false negatives
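The four scores above can be computed directly from the cells of the confusion matrix; the sketch below uses their standard definitions with made-up counts.

```python
def classification_scores(tp, fp, tn, fn):
    """Accuracy, precision, recall and F1 from the cells of a confusion matrix."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    # Share of predicted positives that are actually positive.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Share of actual positives that the model finds.
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


print(classification_scores(tp=40, fp=10, tn=35, fn=15))
```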

Gain chart : The gain graph shows at a glance which threshold to select in order to maximize the gain defined in the cost matrix.
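The gain chart can be sketched by sweeping the threshold and computing the average gain from the cost matrix at each value. The gains, labels, and probabilities below are hypothetical, and a probability equal to the threshold is assumed to count as positive.

```python
def gain_curve(labels, probs, gains):
    """(threshold, average gain per prediction) for each candidate threshold."""
    curve = []
    for threshold in sorted(set(probs)):
        total = 0.0
        for label, prob in zip(labels, probs):
            predicted_positive = prob >= threshold  # assumption: ties count as positive
            if predicted_positive:
                total += gains["tp"] if label == 1 else gains["fp"]
            else:
                total += gains["fn"] if label == 1 else gains["tn"]
        curve.append((threshold, total / len(labels)))
    return curve


gains = {"tp": 10.0, "fp": -2.0, "tn": 0.0, "fn": -5.0}  # hypothetical cost matrix
labels = [0, 0, 1, 0, 1, 1, 0, 1]
probs = [0.10, 0.30, 0.35, 0.40, 0.60, 0.70, 0.80, 0.90]
curve = gain_curve(labels, probs, gains)
best_threshold, best_gain = max(curve, key=lambda point: point[1])
print(best_threshold, best_gain)
```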

Decision chart : The decision graph shows all the proposed metrics across the full range of probability thresholds. You can thus see at which threshold each metric reaches its maximum and choose your selection threshold accordingly.

Note that the dashed curve shows the expected gain per prediction. It therefore depends entirely on the cost matrix and is updated if you change the gain of any of the four cells of the matrix.

Lift per bin : The predictions are sorted in descending order of probability, and the graph shows the lift of each decile (bin). For example, a lift of 4 means that the decile contains 4 times more positives than the population average.

The orange horizontal line marks a lift of 1, i.e. the population average.
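Lift per bin can be sketched as follows: sort the predictions by descending probability, cut them into equal-size bins (deciles by default), and divide each bin's positive rate by the overall positive rate. The sample data is made up, and the integer bin boundaries are one possible choice when the number of rows is not a multiple of the bin count.

```python
def lift_per_bin(labels, probs, n_bins=10):
    """Lift of each bin after sorting predictions by descending probability."""
    if len(labels) < n_bins:
        raise ValueError("need at least one row per bin")
    ranked = [label for _, label in sorted(zip(probs, labels), key=lambda t: t[0], reverse=True)]
    base_rate = sum(ranked) / len(ranked)  # positive rate of the whole population
    lifts = []
    for i in range(n_bins):
        start = i * len(ranked) // n_bins
        end = (i + 1) * len(ranked) // n_bins
        chunk = ranked[start:end]
        lifts.append((sum(chunk) / len(chunk)) / base_rate)
    return lifts


labels = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
probs = [0.95, 0.90, 0.85, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20]
print(lift_per_bin(labels, probs, n_bins=5))  # quintiles, to keep the example small
```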

Cumulated lift : This curve measures the proportion of positives that can be captured by targeting only a subsample of the population. It plots the proportion of positives captured against the proportion of the population selected.

The orange diagonal line represents a random model (x% of the positives are obtained by randomly drawing x% of the population). The blue segmented line represents a perfect model (100% of the positives are obtained by targeting a share of the population equal to its positive rate).
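The cumulated lift curve can be computed by ranking predictions by descending probability and recording, after each row, the share of the population targeted and the share of positives captured. This is a minimal sketch with made-up data; a perfect model would reach 1.0 at an x value equal to the positive rate.

```python
def cumulated_lift(labels, probs):
    """(share of population targeted, share of positives captured) points."""
    ranked = [label for _, label in sorted(zip(probs, labels), key=lambda t: t[0], reverse=True)]
    n, total_positives = len(ranked), sum(ranked)
    points, captured = [(0.0, 0.0)], 0
    for i, label in enumerate(ranked, start=1):
        captured += label
        points.append((i / n, captured / total_positives))
    return points


labels = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
probs = [0.95, 0.90, 0.85, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20]
for x, y in cumulated_lift(labels, probs):
    print(f"{x:.0%} of population -> {y:.0%} of positives")
```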

ROC curve : The ROC curve illustrates the overall performance of the classifier (more info: https://en.wikipedia.org/wiki/Receiver_operating_characteristic). The closer the curve is to a straight diagonal line, the closer the classifier is to a random process. The closer the curve gets to the upper left corner, the closer your classifier is to perfection.
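For reference, the ROC curve plots the true positive rate against the false positive rate as the threshold decreases, and the area under it (AUC) summarizes the curve: about 0.5 for random guessing, 1.0 for a perfect ranking. The sketch below computes both with the trapezoidal rule on made-up data; whether the platform reports AUC alongside the curve is not stated here.

```python
def roc_points(labels, probs):
    """(false positive rate, true positive rate) for each threshold, high to low."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]
    for threshold in sorted(set(probs), reverse=True):
        tp = sum(1 for y, p in zip(labels, probs) if p >= threshold and y == 1)
        fp = sum(1 for y, p in zip(labels, probs) if p >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the curve with the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


labels = [0, 0, 1, 0, 1, 1, 0, 1]
probs = [0.10, 0.30, 0.35, 0.40, 0.60, 0.70, 0.80, 0.90]
print(auc(roc_points(labels, probs)))
```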

Accuracy VS K results : this graph shows how accuracy and MRR (mean reciprocal rank) evolve for several values of K, the number of results considered.
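As a minimal sketch, assuming each query returns a ranked list of candidates and exactly one candidate is correct, accuracy at K is the share of queries whose correct answer appears in the top K results, and MRR at K averages 1/rank of the correct answer (counting 0 when it falls outside the top K). The data below is hypothetical, and the platform's exact definitions may differ.

```python
def accuracy_at_k(rankings, truths, k):
    """Share of queries whose correct answer is in the top k results."""
    hits = sum(1 for ranked, truth in zip(rankings, truths) if truth in ranked[:k])
    return hits / len(truths)


def mrr_at_k(rankings, truths, k):
    """Mean reciprocal rank, counting 0 when the answer is outside the top k."""
    total = 0.0
    for ranked, truth in zip(rankings, truths):
        top = ranked[:k]
        if truth in top:
            total += 1.0 / (top.index(truth) + 1)
    return total / len(truths)


# Hypothetical ranked results for three queries whose correct answer is "a".
rankings = [["a", "b", "c"], ["b", "a", "c"], ["c", "b", "a"]]
truths = ["a", "a", "a"]
for k in (1, 2, 3):
    print(k, accuracy_at_k(rankings, truths, k), mrr_at_k(rankings, truths, k))
```

Both scores can only increase with K, which is why the graph is read as a trade-off between how many results you return and how often the right one is among them.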